Overview

The Voice-Controlled Interface for Digital Musical Instruments (VCI4DMI) is a system driven solely by voice for the real-time control of electronic musical instruments, including sound generators (synthesizers) and sound processors (effects).

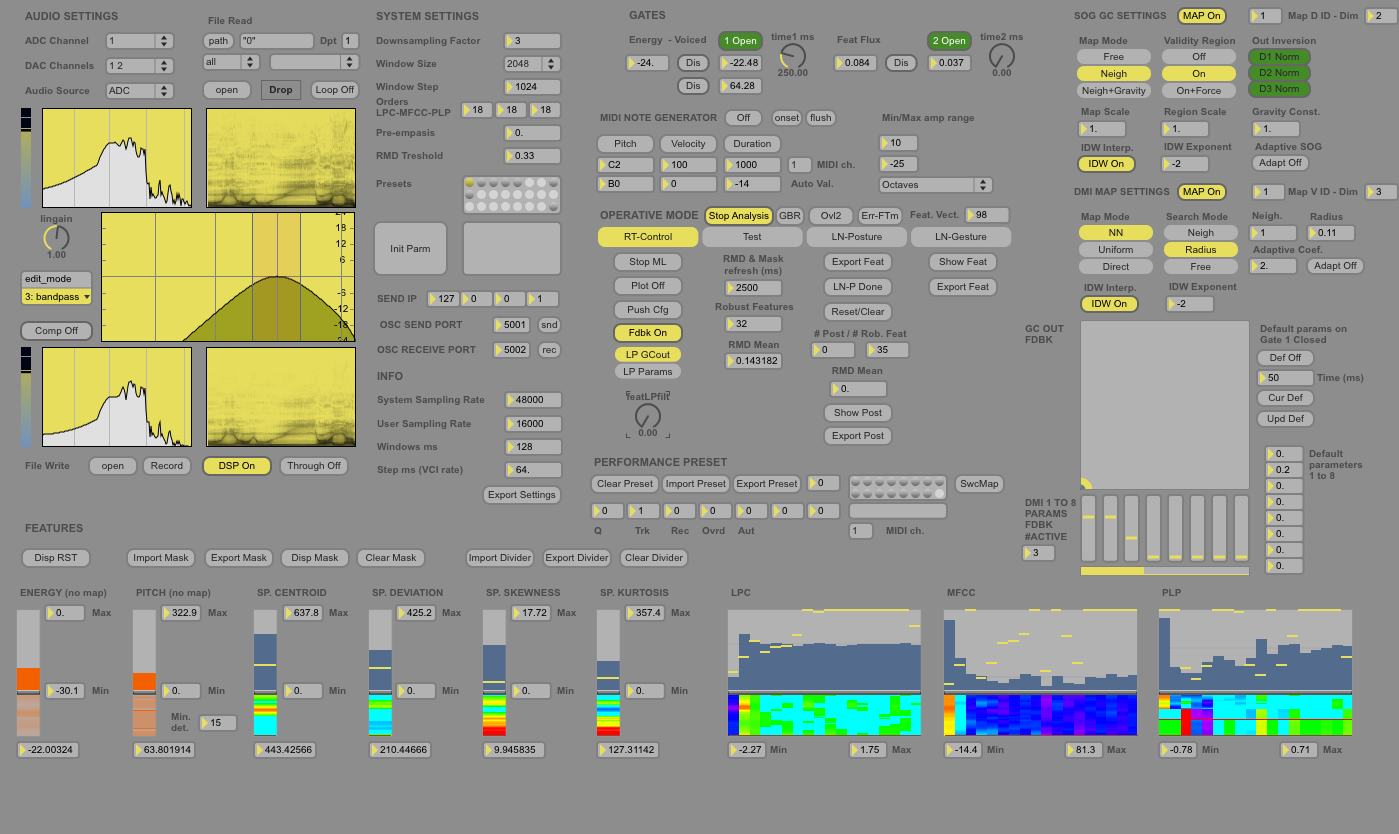

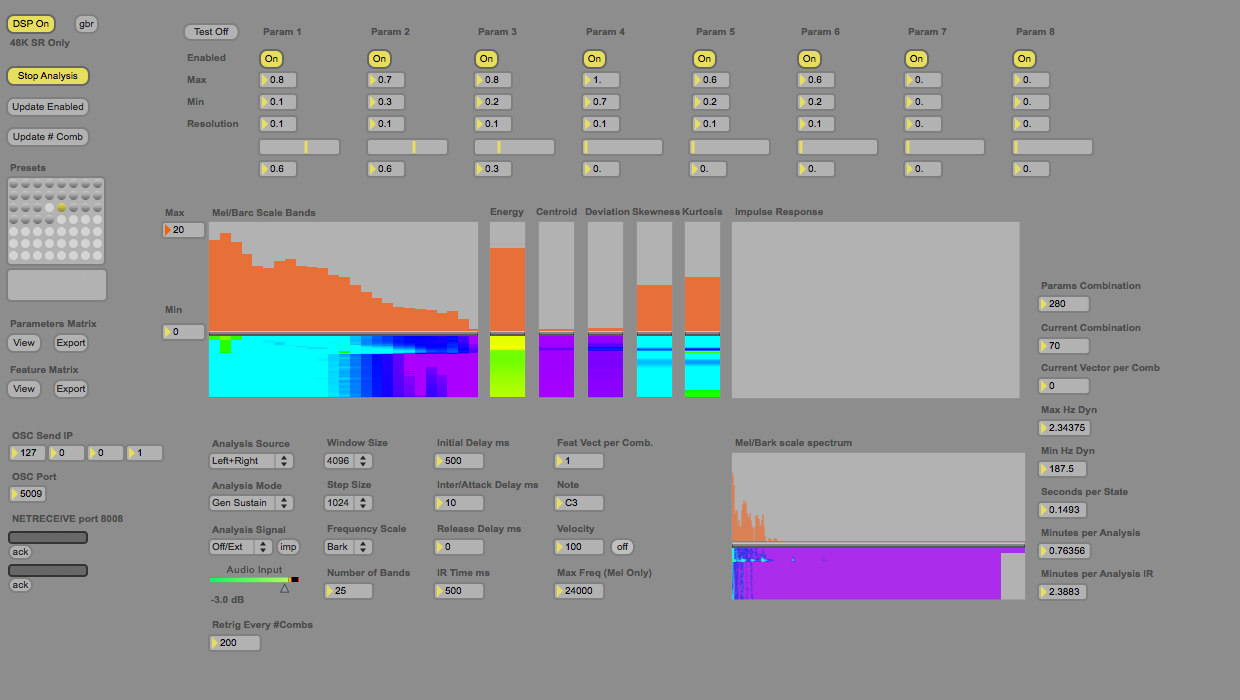

The VCI4DMI implements a generic and adaptive method to map the voice to the real-valued parameters of digital musical instruments. It is based on several real-time signal processing and off-line machine learning algorithms that produce and use maps between heterogeneous spaces. The aim is to maximize the expressivity of the interface and to minimize user intervention in the system setup. The VCI4DMI comprises:

- a technique that learns to extract robust, continuous and multidimensional gestural data from a specific performer's voice, optimizing the computation towards noise minimization and gesture accentuation;

- a framework to analytically model the relationship between the variation of an arbitrary number of parameters and the resulting perceptual changes in the sound of any deterministic synthesis or processing instrument;

- a strategy to reduce the dimensionality of the instrument control space, which retrieves discontinuity-free parameters from a reduced sound map;

- a dual-layer generative mapping strategy that transforms gestures in the vocal space into trajectories in the instrument sonic space, conferring a linear perceptual response and topological coherence while maximizing the breadth of explorable sonic space over a set of instrument parameters.

The use of unsupervised mapping techniques minimizes the user's effort in setting up the system.
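To make the idea of a reduced sound map and its inverse lookup more concrete, the Python sketch below samples a toy two-parameter instrument on a grid, describes each sample with a small timbre feature vector, reduces the features to two dimensions, and retrieves the parameter set whose sound lies closest to a target point. This illustration rests on explicit assumptions and is not the VCI4DMI implementation. `toy_timbre_features` is a hypothetical stand-in for rendering and analyzing real audio. PCA replaces the dimensionality reduction techniques described in the publications. A plain nearest-neighbour lookup does not guarantee the discontinuity-free parameter retrieval described above.

```python
import numpy as np


def toy_timbre_features(params):
    """HYPOTHETICAL stand-in for rendering audio and extracting timbre features."""
    cutoff, resonance = params
    return np.array([
        cutoff,                       # brightness-like descriptor
        resonance * (1.0 - cutoff),   # interaction between the two parameters
        np.sin(np.pi * cutoff),       # non-linear perceptual response
    ])


def build_sound_map(steps=11):
    """Sample the parameter space on a regular grid and analyse every point."""
    axis = np.linspace(0.0, 1.0, steps)
    params = np.array([[a, b] for a in axis for b in axis])
    feats = np.array([toy_timbre_features(p) for p in params])
    return params, feats


def reduce_pca(feats, dims=2):
    """Project standardised features onto their first `dims` principal axes."""
    mean = feats.mean(axis=0)
    std = feats.std(axis=0)
    std[std == 0] = 1.0                      # guard against constant features
    z = (feats - mean) / std
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    return z @ vt[:dims].T


def lookup_params(point, reduced, params):
    """Inverse map: parameter set whose sound is closest to `point` in the map."""
    idx = np.argmin(np.linalg.norm(reduced - point, axis=1))
    return params[idx]


params, feats = build_sound_map()
reduced = reduce_pca(feats)
target = reduced.mean(axis=0)                # centre of the reduced sound map
print(lookup_params(target, reduced, params))
```

In this toy model, every grid point with `cutoff = 1` has identical features, so several parameter sets collapse onto a single map position. Regions like this are where a simple nearest-neighbour inverse becomes ambiguous.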

Generic and Generative Unsupervised Adaptive Mapping from Voice to Instrument Timbre

The VCI4DMI provides a novel end-to-end solution for implementing ad hoc vocal interfaces, engendering new paradigms in musical performance that better exploit creativity and virtuosity. The voice is processed at the sub-verbal level to minimize response latency, so the continuous variation of the timbre of the voice is what drives the interaction with the instrument. The targets of the voice control are an arbitrary number of real-valued instrument parameters. When these parameters change, they alter the sound synthesis or processing algorithm and modify the timbre or texture of the generated sound. The user provides the system with examples of varying voice (vocal gestures) and constant voice (vocal postures), and selects an arbitrary number of instrument parameters to be controlled by voice. The system then analyzes the parameters-to-sound relationship of the instrument to create a sonic map. It also analyzes the voice examples to identify robust and independent control signals, and finds a case-specific transformation between the two that maximizes the breadth of sonic space explorable by voice. This information is used in the runtime mapping. There, the timbre of the live voice input is transformed into (typically) two to four control signals, representing an instantaneous position in a multidimensional space. This position is translated into a position in the instrument sound map, which identifies a unique sound that the instrument will generate. That sound is associated with a unique set of parameters that are fed instantaneously to the instrument. In addition, the system can trigger the instrument sound synthesis by producing monophonic MIDI note-on/off messages from onset detection on the live voice. Key, velocity and duration can be either fixed or automatically derived from the pitch and energy computed from the voice.
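The voice-side analysis can be sketched in the same spirit. The Python example below assumes that the vocal-gesture and vocal-posture examples have already been converted into frame-by-frame feature matrices. It treats any variation observed during the constant postures as noise, ranks features by the ratio of gesture variance to posture variance, and decorrelates the best features with PCA to obtain a small number of control signals scaled to [0, 1]. The variance-ratio criterion, the use of PCA, the synthetic data and the function names are all assumptions made for illustration. The method actually used by the VCI4DMI is described in the related publications.

```python
import numpy as np


def rank_features(gesture, posture):
    """ASSUMED criterion: rank features by gesture variance over posture variance.

    gesture: (frames, features) array from the vocal-gesture examples.
    posture: (frames, features) array from the vocal-posture examples; the voice
             is held constant there, so its variation is treated as noise.
    """
    signal_var = gesture.var(axis=0)
    noise_var = posture.var(axis=0) + 1e-12      # avoid division by zero
    ratio = signal_var / noise_var
    return np.argsort(ratio)[::-1], ratio        # most robust feature first


def fit_control_space(gesture, selected, n_controls=2):
    """Decorrelate the selected features with PCA and fit a [0, 1] scaling."""
    x = gesture[:, selected]
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    basis = vt[:n_controls].T                    # principal directions as columns
    proj = (x - mean) @ basis
    lo, hi = proj.min(axis=0), proj.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)

    def to_controls(frame):
        """Map one live feature frame to n_controls values clipped to [0, 1]."""
        c = (frame[selected] - mean) @ basis
        return np.clip((c - lo) / span, 0.0, 1.0)

    return to_controls


# Synthetic example data: features 0 and 3 vary deliberately, the rest is noise.
rng = np.random.default_rng(0)
n_frames, n_features = 500, 6
t = np.linspace(0.0, 1.0, n_frames)
gesture = rng.normal(0.0, 0.05, (n_frames, n_features))
gesture[:, 0] += 2.0 * t - 1.0
gesture[:, 3] += np.sin(6.0 * np.pi * t)
posture = rng.normal(0.0, 0.05, (n_frames, n_features))

order, ratio = rank_features(gesture, posture)
to_controls = fit_control_space(gesture, order[:3])
print(order[:2], to_controls(gesture[250]))
```

At runtime, `to_controls` would be called once per analysis frame of the live voice. Its output is the low-dimensional position that the mapping stage then translates into a position in the instrument sound map.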
The system can be used as an extension to other controllers, modulating instrument parameters by voice while leaving note playing to traditional hand-based interfaces. Alternatively, it can handle both tasks by voice, with the obvious limitation of monophony.
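When notes are also played by voice, the note-on/off generation can be pictured as a small state machine. The Python sketch below is hypothetical. It assumes that per-frame RMS energy (normalized to roughly [0, 1]) and fundamental frequency estimates are already available. It detects onsets and offsets with a pair of energy thresholds (hysteresis), and derives the key from the pitch and the velocity from the energy unless fixed values are given. It also emits raw MIDI status/data tuples on channel 1 rather than using any particular MIDI library. Note duration simply follows the voice here; a fixed duration would need an extra frame counter.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class MonoNoteTracker:
    """HYPOTHETICAL monophonic voice-to-MIDI note generator (channel 1)."""

    on_threshold: float = 0.10        # assumed energy that starts a note
    off_threshold: float = 0.05       # assumed energy that ends a note
    fixed_key: Optional[int] = None   # force a key instead of following pitch
    fixed_velocity: Optional[int] = None
    active_key: Optional[int] = field(default=None, init=False)

    @staticmethod
    def hz_to_key(f0_hz: float) -> int:
        """Nearest MIDI key (A4 = 440 Hz = key 69); unvoiced frames -> key 60."""
        if f0_hz <= 0:
            return 60
        return max(0, min(127, round(69 + 12 * math.log2(f0_hz / 440.0))))

    def process(self, energy: float, f0_hz: float) -> List[Tuple[int, int, int]]:
        """Consume one analysis frame and return the MIDI messages to send."""
        messages = []
        if self.active_key is None and energy >= self.on_threshold:
            key = self.fixed_key if self.fixed_key is not None else self.hz_to_key(f0_hz)
            if self.fixed_velocity is not None:
                vel = self.fixed_velocity
            else:
                vel = max(1, min(127, round(127 * energy)))
            messages.append((0x90, key, vel))          # note-on
            self.active_key = key
        elif self.active_key is not None and energy < self.off_threshold:
            messages.append((0x80, self.active_key, 0))  # note-off
            self.active_key = None
        return messages


tracker = MonoNoteTracker()
sent = []
for energy, f0 in [(0.02, 220.0), (0.30, 220.0), (0.25, 233.1), (0.04, 233.1)]:
    sent.extend(tracker.process(energy, f0))
print(sent)  # [(144, 57, 38), (128, 57, 0)]
```

The gap between the two thresholds keeps small energy fluctuations near the onset level from retriggering notes. Because the tracker is monophonic, a pitch change during a sustained note does not start a new note in this sketch.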

The VCI4DMI was selected as one of the 25 semi-finalists of the 2014 edition of the Margaret Guthman Musical Instrument Competition, which received a record number of submissions. To date, the VCI4DMI has been used in several live performances. Theoretical and technical details are available in the related publications. The current fully functional VCI4DMI prototype is implemented in Max and MATLAB. A set of Max for Live devices makes it possible to analyze and perform with instruments hosted in Ableton Live. The VCI4DMI source code is published under the LGPL3 license.

The VCI4DMI was conceptualized, designed and developed in the framework of my Ph.D. research project in Integrative Sciences and Engineering, with the mentorship of Prof. Lonce Wyse.

S. Fasciani, “Voice-Controlled Interface for Digital Musical Instruments,” Ph.D. Thesis, National University of Singapore, 2014. [PDF link1 – PDF link2]

VCI4DMI Download

git clone https://github.com/stefanofasciani/VCI4DMI

svn checkout https://github.com/stefanofasciani/VCI4DMI

Related Publications

S. Fasciani and L. Wyse, “A Voice Interface for Sound Generators: adaptive and automatic mapping of gestures to sound,” in Proceedings of the 12th conference on New Interfaces for Musical Expression, Ann Arbor, MI, US, 2012. [PDF]

S. Fasciani and L. Wyse, “Adapting general purpose interfaces to synthesis engines using unsupervised dimensionality reduction techniques and inverse mapping from features to parameters,” in Proceedings of the 2012 International Computer Music Conference, Ljubljana, Slovenia, 2012. [PDF]

S. Fasciani, “Voice Features For Control: A Vocalist Dependent Method For Noise Measurement And Independent Signals Computation,” in Proceedings of the 15th International Conference on Digital Audio Effects, York, UK, 2012. [PDF]

S. Fasciani and L. Wyse, “A Self-Organizing Gesture Map for a Voice-Controlled Instrument Interface,” in Proceedings of the 13th conference on New Interfaces for Musical Expression, Daejeon, Korea, 2013. [PDF]

S. Fasciani and L. Wyse, “One at a Time by Voice: Performing with the Voice-Controlled Interface for Digital Musical Instruments,” in Proceedings of the NTU/ADM Symposium on Sound and Interactivity 2013, Singapore, 2013. [PDF] Extended version in eContact! 16.2, 2014. [HTML]

S. Fasciani and L. Wyse, “Mapping the Voice for Musical Control,” Arts and Creativity Lab, Technical Report, 2013. [PDF]

S. Fasciani, “Voice-Controlled Interface for Digital Musical Instruments,” Ph.D. Thesis, National University of Singapore, 2014. [PDF link1 – PDF link2]

Videos

- Runtime VCI4DMI Max Patch

- VCI4DMI Max/MSP Instrument Analysis Patch

- VCI4DMI Max For Live Front Ends and Back End

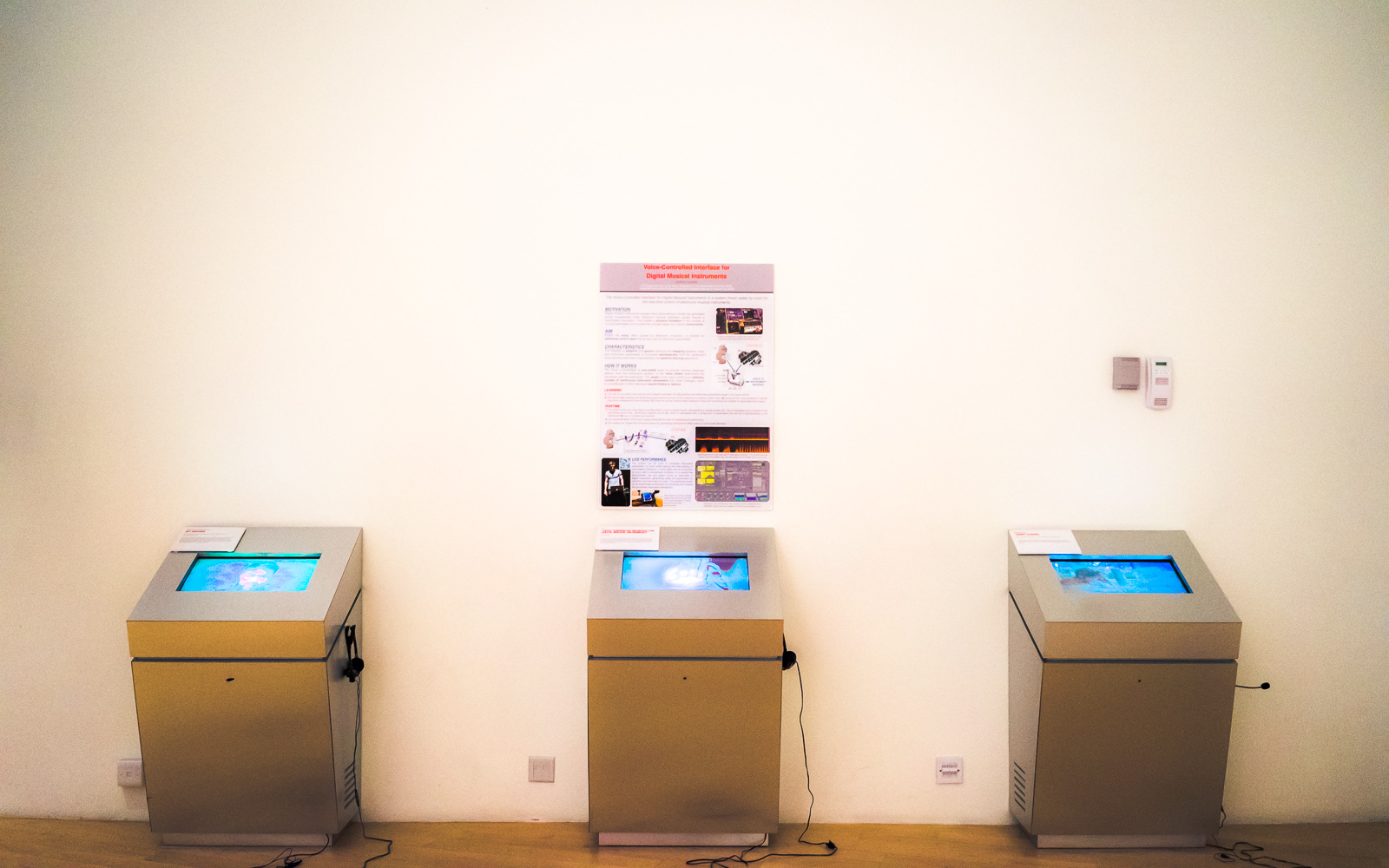

- Random Blends 2014 Installation at Singapore Art and Science Museum

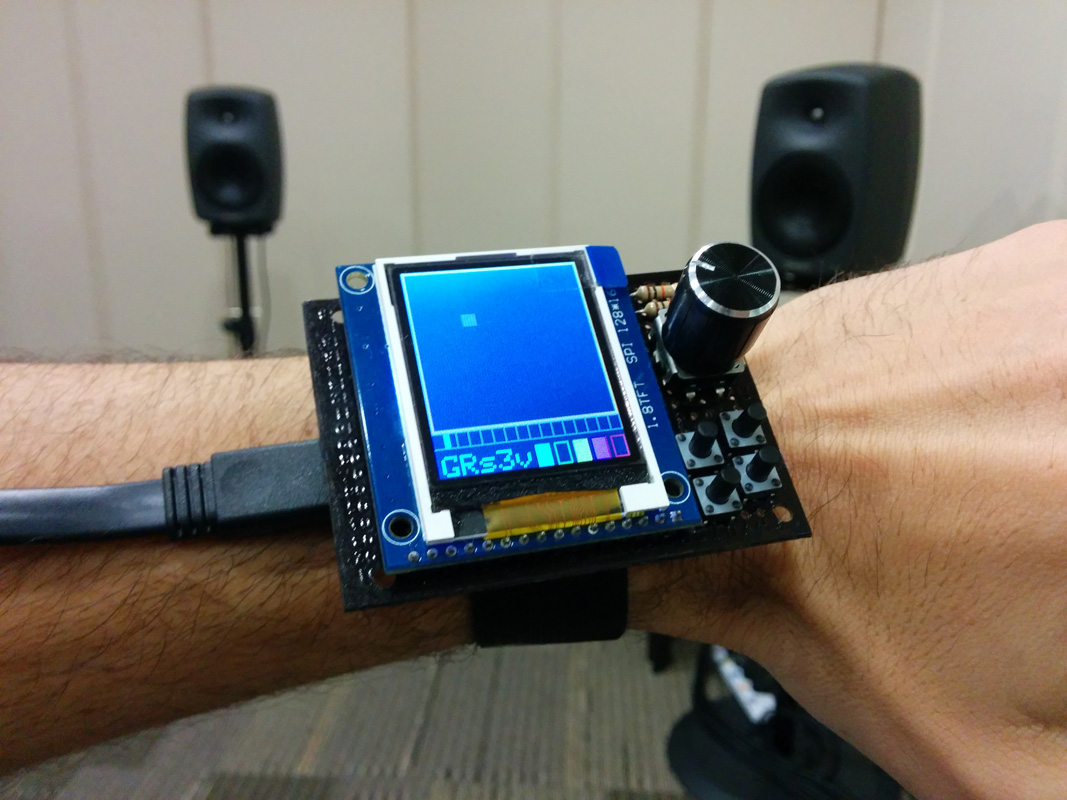

- VCI4DMI wrist controller

- “One at a Time by Voice” performance at SI 2013 Concert

- Georgia Tech Guthman 2014 Competition performance

- Lindblad Electronic Music Meet 2014 Concert